AICO: Architecture for a Local AI Companion

AICO is meant to stay. It should follow you across devices, grow new abilities, and still feel like *the same companion* years from now. That requirement shows up directly in the architecture: a message-driven platform with clear boundaries, multiple deployment shapes, and a very deliberate CLI‑first development approach.

This article walks through the core ideas behind the architecture:

System → Subsystem → Domain → Module → Component

Message bus and modular services

Roaming and embodiment across devices

Splitting backend, modelservice, and frontend

Why the CLI came first and why it still matters

System → Subsystem → Domain → Module → Component

AICO is structured as a small platform rather than a single app. The hierarchy looks like this:

System – the whole AICO platform.

Subsystems – the backend, modelservice, shared services, Studio, CLI and frontend.

Domains – Core AI, Data, Admin, Extensibility, Frontend, Operations.

Modules – functional units inside a domain, e.g. Memory, Emotion, Agency, Scheduler, Security, Plugin Manager.

Components – focused building blocks, e.g. Appraisal Engine, Trait Vector, Retrieval Pipeline, Task Runner.

This forces every piece of functionality to “live” somewhere specific. Instead of a ball of Python, you get:

Clear ownership (which domain is responsible?).

Narrow surfaces (what messages can this module send/receive?).

Easier evolution (you can add, replace or refactor one module without rewriting the rest).

That structure is enforced not only in docs but in how services talk to each other.
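To make the idea of every piece "living" somewhere concrete, here is a minimal sketch of the hierarchy as a tree that can be addressed by path. The `Node` class, the path syntax and the particular nesting (Appraisal Engine under Emotion under Core AI under the backend) are assumptions for illustration, not AICO's actual code or layout.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One element of the hierarchy; `level` names its rank."""
    name: str
    level: str
    children: dict[str, "Node"] = field(default_factory=dict)

    def add(self, name: str, level: str) -> "Node":
        child = Node(name, level)
        self.children[name] = child
        return child

    def resolve(self, path: str) -> "Node":
        """Walk a slash-separated path below this node."""
        node = self
        for part in path.split("/"):
            node = node.children[part]  # KeyError means the piece lives nowhere
        return node


# Names come from the lists above; the nesting is illustrative only.
system = Node("aico", "system")
backend = system.add("backend", "subsystem")
core_ai = backend.add("core_ai", "domain")
emotion = core_ai.add("emotion", "module")
emotion.add("appraisal_engine", "component")

component = system.resolve("backend/core_ai/emotion/appraisal_engine")
print(component.level)  # component
```

A lookup that fails is a useful signal in this model: functionality without a path has no owner yet.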

Message-driven, modular services

At runtime, AICO is made up of several services connected by a CurveZMQ‑encrypted message bus that carries Protocol Buffers messages:

Backend (FastAPI, Python) – API gateway, orchestrator, task scheduler, entry point for frontends.

Modelservice – dedicated process that owns LLMs, embeddings, emotion/sentiment models, TTS and related pipelines.

Shared library – the cross-cutting Python package (memory system, knowledge graph, security, Adaptive Memory System (AMS), utilities).

Frontend (Flutter) – the UI, with its own encrypted local store and WebSocket connection to the backend.

Studio (React, WIP) – an admin UI that plugs into the same APIs and message bus.

Everything important flows across the bus as typed Protobuf messages. That solves a few problems early:

Hard boundaries – you cannot just "reach into" another module’s database; you have to send a message.

Security by default – the bus is encrypted end-to-end via CurveZMQ, with mutual authentication.

Observability – messages are loggable and replayable; background tasks can inspect them.

Instead of a fragile chain of direct calls, you get something closer to a small distributed system – even if everything runs on the same machine.
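The bus pattern described in this section can be sketched with the standard library alone. This is an in-process stand-in, not AICO's bus: the real system uses ZeroMQ with CurveZMQ encryption and Protobuf messages, while here a frozen dataclass plays the role of a typed message. The `Envelope` and `LocalBus` names and the topic strings are hypothetical. Prefix matching on topics mirrors how ZeroMQ SUB sockets filter messages.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Envelope:
    """Stand-in for a typed Protobuf message: topic, sender and payload."""
    topic: str
    sender: str
    payload: dict


class LocalBus:
    """In-process bus; subscribers match topics by prefix."""

    def __init__(self) -> None:
        self._subs: dict[str, list[Callable[[Envelope], None]]] = defaultdict(list)
        self.log: list[Envelope] = []  # every message is recorded for inspection or replay

    def subscribe(self, prefix: str, handler: Callable[[Envelope], None]) -> None:
        self._subs[prefix].append(handler)

    def publish(self, msg: Envelope) -> None:
        self.log.append(msg)
        for prefix, handlers in self._subs.items():
            if msg.topic.startswith(prefix):
                for handler in handlers:
                    handler(msg)


bus = LocalBus()
seen: list[str] = []
bus.subscribe("memory/", lambda m: seen.append(m.topic))

bus.publish(Envelope("memory/store", "backend", {"text": "hello"}))
bus.publish(Envelope("emotion/update", "modelservice", {"valence": 0.4}))

print(seen)          # ['memory/store']
print(len(bus.log))  # 2
```

The memory subscriber never sees the emotion message, yet both messages end up in the log. That is the combination of narrow surfaces and observability the list above describes.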

What actually runs inside the modelservice

The modelservice exists so that "what AICO can think and say" is cleanly separated from "how AICO is wired and deployed".

Concretely, it hosts:

Core conversation LLMs – the main dialog models that generate responses.

Embeddings pipelines – semantic vector generation for retrieval across working memory, semantic memory and the knowledge graph.

Entity and intent models – zero‑/few‑shot entity recognition and intent classification that turn raw text into structured signals.

Sentiment and emotion models – classifiers that feed into the emotion system and Memory Album.

Text‑to‑speech engines – fast and high‑quality voices that give the companion a presence beyond text.

All of this is kept in a dedicated service for a few reasons:

The rest of the system talks to "capabilities" (summarise, embed, detect entities, speak) instead of wiring models directly.

Different hardware profiles (CPU‑only laptop vs. GPU home lab vs. cloud tenant) can swap model configurations without touching backend or frontend code.

Experimental models can be added, A/B‑tested and rolled back behind a stable API while the user experience remains consistent.
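A small sketch shows what "talking to capabilities" can look like in code. Everything here is an assumption made for illustration: the profile names, the `ModelService` class and the toy embedders (which compute hand-made features and involve no real model). The point is only that the caller depends on `embed`, not on whichever model backs it.

```python
from typing import Callable, Protocol


class Embedder(Protocol):
    def embed(self, text: str) -> list[float]: ...


class TinyEmbedder:
    """Toy CPU-friendly stand-in: two hand-made features."""
    def embed(self, text: str) -> list[float]:
        return [float(len(text)), float(text.count(" ") + 1)]


class LargerEmbedder:
    """Toy stand-in for a heavier model: same interface, one more dimension."""
    def embed(self, text: str) -> list[float]:
        checksum = float(sum(map(ord, text)) % 97)
        return [float(len(text)), float(text.count(" ") + 1), checksum]


# Hypothetical profile names; the article only says hardware profiles can differ.
PROFILES: dict[str, Callable[[], Embedder]] = {
    "cpu_laptop": TinyEmbedder,
    "gpu_homelab": LargerEmbedder,
}


class ModelService:
    """Callers ask for a capability; the model behind it is configuration."""

    def __init__(self, profile: str) -> None:
        self._embedder = PROFILES[profile]()

    def embed(self, text: str) -> list[float]:
        return self._embedder.embed(text)


def index_note(service: ModelService, note: str) -> int:
    """Backend-side code: knows the capability, not the model."""
    return len(service.embed(note))


print(index_note(ModelService("cpu_laptop"), "call mum on sunday"))   # 2
print(index_note(ModelService("gpu_homelab"), "call mum on sunday"))  # 3
```

Swapping the profile changes the vector size but not a single line of `index_note`, which is the property the three reasons above rely on.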

Roaming and embodiment: one companion, many bodies

AICO is meant to be more like a person than like an app: it can appear in different places, but it stays itself. Architecturally, that means separating where the brain lives from where the body shows up.

Today that looks like this:

Backend + Modelservice can run on:

a single laptop for local experiments,

a home lab or server,

or a self-controlled cloud account.

Frontends can run on:

desktop and laptop,

phone or tablet,

in the future: AR/VR, smart displays, holographic projectors, robots or other devices.

The message bus and API layer bridge those worlds. As long as a device can reach the backend securely, the same AICO instance – with the same three-tier memory, knowledge graph, emotion state and agency – can be embodied in different UIs and form factors.

This is the basis for roaming:

AICO may speak through the Mac on the desk today,

later appear as an avatar on a tablet in the living room,

and eventually live inside a headset or spatial environment – while carrying the same memories and personality.

Because state lives in the core system (encrypted databases, LMDB, ChromaDB, libSQL, knowledge graph), not in any specific device UI, embodiment can change without losing the relationship.
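As a rough illustration of state living in the core rather than in the device, the sketch below lets two frontends share one conversation history. `CompanionCore` and `Frontend` are hypothetical names, and a plain in-memory list stands in for the encrypted stores.

```python
from dataclasses import dataclass, field


@dataclass
class CompanionCore:
    """Holds the long-lived state; devices never keep their own copy."""
    history: list[tuple[str, str]] = field(default_factory=list)  # (device, text)

    def handle(self, device: str, text: str) -> int:
        self.history.append((device, text))
        return len(self.history)  # turn number within the shared conversation


@dataclass
class Frontend:
    """A thin "body": it forwards input and renders what the core returns."""
    device: str
    core: CompanionCore

    def say(self, text: str) -> int:
        return self.core.handle(self.device, text)


core = CompanionCore()
desk = Frontend("mac", core)
tablet = Frontend("tablet", core)

desk.say("remind me about the dentist")
turn = tablet.say("what did I ask you earlier?")

print(turn)             # 2
print(core.history[0])  # ('mac', 'remind me about the dentist')
```

The tablet joins the conversation at turn two because the first turn, spoken at the desk, was never stored on the Mac in the first place.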

The storage stack is intentionally conservative: encrypted relational storage for long‑lived data, LMDB for fast working memory, and a vector store for semantic retrieval. Combined with the message bus, this makes AICO behave like a coherent, self‑contained information system that can be moved between machines without losing continuity.

On top of this sits a task scheduler that runs regular background work: memory consolidation, graph maintenance, log hygiene, health checks and other infrastructure tasks. This keeps the system in a state where it can run reliably for years, not just weeks.
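The scheduling idea can be sketched as a simple interval scheduler. This is a minimal stand-in, not AICO's scheduler: the job names and intervals are made up, and time is passed in explicitly so the behaviour is easy to follow. A long-running process would call `tick(time.monotonic())` in a loop instead.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Job:
    name: str
    interval_s: float
    action: Callable[[], None]
    next_run: float = 0.0


class IntervalScheduler:
    """Runs each job whenever its interval has elapsed."""

    def __init__(self) -> None:
        self.jobs: list[Job] = []

    def every(self, interval_s: float, name: str, action: Callable[[], None]) -> None:
        self.jobs.append(Job(name, interval_s, action))

    def tick(self, now: float) -> list[str]:
        """Run all due jobs and return their names."""
        ran = []
        for job in self.jobs:
            if now >= job.next_run:
                job.action()
                job.next_run = now + job.interval_s
                ran.append(job.name)
        return ran


counts = {"consolidate": 0, "health": 0}


def bump(key: str) -> Callable[[], None]:
    def run() -> None:
        counts[key] += 1
    return run


scheduler = IntervalScheduler()
scheduler.every(3600, "memory_consolidation", bump("consolidate"))  # hourly (assumed)
scheduler.every(60, "health_check", bump("health"))                 # every minute (assumed)

for now in (0, 60, 120, 3600):
    scheduler.tick(now)

print(counts)  # {'consolidate': 2, 'health': 4}
```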

Splitting components across devices

The same architecture supports different deployment shapes without changing the code:

All-in-one local setup

Backend, modelservice, and frontend run on one machine.

Simple for initial setup, strong privacy, great for tinkerers.

Home lab + roaming clients

Backend and modelservice run on a home server or lab machine.

Frontends connect from laptops, phones, tablets over the local network or VPN.

The family can share an AICO instance while data stays inside the home.

Self-hosted cloud core

Backend and modelservice run in the user’s own cloud tenant.

Frontends connect from anywhere.

Useful when GPUs or higher availability are needed, still without handing data to a multi-tenant SaaS.

Underneath, it is always the same system:

ZeroMQ bus and FastAPI gateway

Encrypted libSQL and LMDB stores

ChromaDB + knowledge graph

Scheduler, AMS, emotion system, and agency framework

The only thing that changes is *where* each component runs.
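One way to picture the three shapes is as placement data over the same set of components. The `Deployment` class and the host names below are placeholders chosen for this sketch, not AICO configuration keys or defaults.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    """Where each part runs; host names are placeholders."""
    name: str
    backend_host: str
    modelservice_host: str
    frontend_hosts: tuple[str, ...]

    def core_is_local_to(self, device: str) -> bool:
        """True when this device also hosts backend and modelservice."""
        return device == self.backend_host == self.modelservice_host


ALL_IN_ONE = Deployment("all-in-one", "laptop", "laptop", ("laptop",))
HOME_LAB = Deployment("home-lab", "homeserver", "homeserver", ("laptop", "phone", "tablet"))
CLOUD_CORE = Deployment("cloud-core", "cloud-vm", "cloud-vm", ("laptop", "phone"))

for shape in (ALL_IN_ONE, HOME_LAB, CLOUD_CORE):
    print(shape.name, shape.core_is_local_to("laptop"))
# all-in-one True
# home-lab False
# cloud-core False
```

Nothing in the structure differs between the three values except the host fields, which matches the claim that only placement changes.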

Memory and data architecture: built to remember individuals

Architecture is only useful for a companion if it supports a rich, stable inner life. AICO's memory and data design is heavily influenced by complementary learning systems (CLS) from cognitive science: the idea that fast, context‑sensitive learning and slower, structural learning need to coexist.

In practice this shows up as three cooperating layers:

Working memory (LMDB) – a fast, time‑boxed store for recent dialogue and short‑term context. It behaves a bit like a hippocampus: quick to learn, quick to forget.

Semantic memory (ChromaDB + BM25) – a hybrid retrieval layer over longer‑term knowledge, notes and facts, combining embeddings and keyword statistics.

Knowledge graph (libSQL + graph engine) – a property graph of people, entities, events and relationships, with temporal edges and analytics. This is closer to a structured, slowly evolving "map of your world".
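Two of the behaviours in the layers above are easy to sketch: a time-boxed working memory and a hybrid score that blends embedding similarity with a keyword score. This is an illustration under assumptions, not AICO's implementation: a list stands in for LMDB, the scores are hand-picked numbers rather than ChromaDB or BM25 output, and the weight `alpha` is a made-up default.

```python
class WorkingMemory:
    """Time-boxed store: entries older than ttl_s are dropped on read."""

    def __init__(self, ttl_s: float) -> None:
        self.ttl_s = ttl_s
        self._items: list[tuple[float, str]] = []

    def add(self, now: float, text: str) -> None:
        self._items.append((now, text))

    def recent(self, now: float) -> list[str]:
        self._items = [(t, s) for t, s in self._items if now - t <= self.ttl_s]
        return [s for _, s in self._items]


def hybrid_score(vector_sim: float, keyword_score: float, alpha: float = 0.7) -> float:
    """Weighted blend of two scores, both assumed normalised to [0, 1]."""
    return alpha * vector_sim + (1 - alpha) * keyword_score


wm = WorkingMemory(ttl_s=1800)
wm.add(0, "user mentioned a job interview")
wm.add(1700, "user asked about dinner ideas")
print(wm.recent(now=2000))  # ['user asked about dinner ideas']

# (vector similarity, keyword score) per candidate; values are invented.
candidates = {"note-a": (0.9, 0.1), "note-b": (0.6, 0.9)}
ranked = sorted(candidates, key=lambda k: hybrid_score(*candidates[k]), reverse=True)
print(ranked)  # ['note-b', 'note-a']
```

In the example, `note-a` wins on embeddings alone, but the strong keyword match lifts `note-b` above it once both signals are combined.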

Above these sits the Adaptive Memory System (AMS), which schedules consolidation, tracks behavioural signals and decides what should move from fast to slow memory. It also supports bandit‑style learning for which skills or retrieval strategies work best for a given person.
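The article does not say which bandit algorithm the AMS uses. As one plausible reading of "bandit-style learning", here is a minimal epsilon-greedy sketch that tracks the mean reward per retrieval strategy. The strategy names and reward values are invented for the example.

```python
import random


class EpsilonGreedy:
    """Keeps a running mean reward per arm and mostly picks the best one."""

    def __init__(self, arms: list[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.epsilon = epsilon
        self.counts = {arm: 0 for arm in arms}
        self.means = {arm: 0.0 for arm in arms}
        self._rng = random.Random(seed)

    def choose(self) -> str:
        if self._rng.random() < self.epsilon:
            return self._rng.choice(list(self.counts))  # explore
        return max(self.means, key=self.means.get)      # exploit

    def update(self, arm: str, reward: float) -> None:
        self.counts[arm] += 1
        # Incremental mean: m += (r - m) / n
        self.means[arm] += (reward - self.means[arm]) / self.counts[arm]


# epsilon=0.0 disables exploration so the example is deterministic.
bandit = EpsilonGreedy(["vector_only", "hybrid"], epsilon=0.0)
bandit.update("vector_only", 0.2)
bandit.update("hybrid", 1.0)
bandit.update("hybrid", 0.5)

print(bandit.means["hybrid"])  # 0.75
print(bandit.choose())         # hybrid
```

With a non-zero `epsilon`, the bandit would occasionally try the weaker strategy again, which lets it notice when a person's preferences shift over time.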

Separate from the automated tiers, the Memory Album captures user‑curated moments – important conversations, bookmarked messages, episodes with strong emotional tone – so that not everything depends on implicit algorithms. Together, these pieces allow AICO to build a history that is both machine‑usable and personally meaningful.

Why AICO was built CLI-first

One of the more opinionated choices in AICO is the CLI‑first approach.

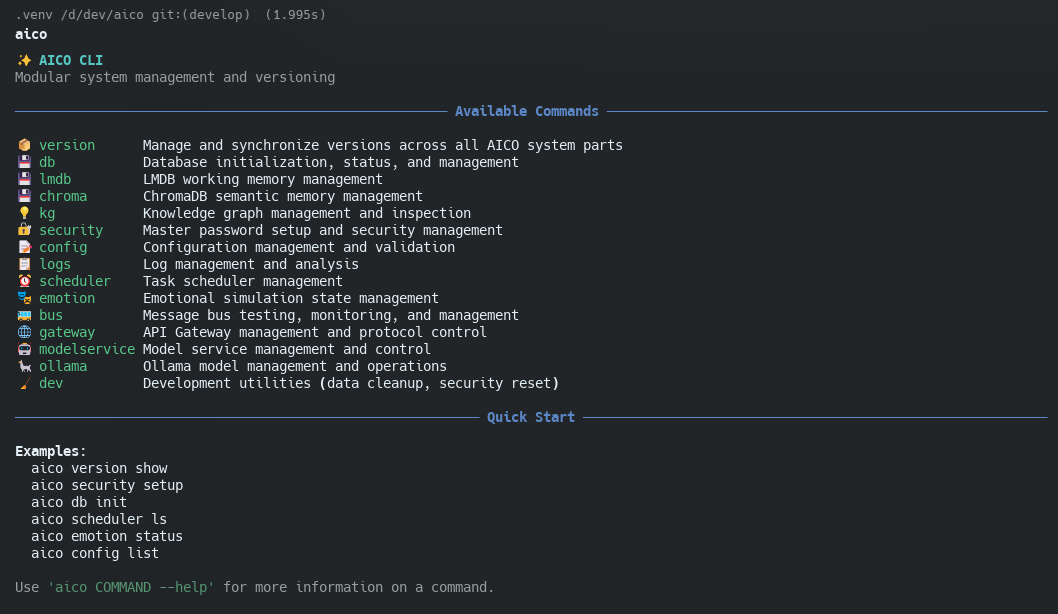

Before investing in Studio or polishing the main UI, the project built a production-ready CLI (Typer + Rich, packaged via PyInstaller). It now ships with 15+ command groups and 100+ subcommands, including:

`version` – version and build information across AICO components,`db` – encrypted database initialization, status and management,`lmdb` – working‑memory (LMDB) inspection and maintenance,`chroma` – semantic memory (ChromaDB) management,`kg` – knowledge graph management and inspection,`security` – master password setup, key management and transport encryption,`config` – configuration management and validation,`logs` – log management and analysis,`scheduler` – task scheduler control and scheduled job inspection,`emotion` – emotional simulation state and history tools,`bus` – message bus testing, monitoring and diagnostics,`gateway` – API gateway lifecycle and protocol control,`modelservice` – model service lifecycle and diagnostics,`ollama` – local LLM and model management,`dev` – development utilities for data cleanup and debugging.

The AICO command line interface - main help page.

This was not an accident. CLI‑first gives a few concrete advantages:

Faster iteration – New behavior can be implemented as a CLI command and wired into the bus and database long before any UI exists.

Reproducible operations – Any diagnostic or recovery sequence is just a series of commands that can be scripted, versioned, and shared.

Better invariants – Features must be coherent at the system level (bus, database, scheduler) before they get a friendly surface.

Headless deployments – Running AICO in a lab or server environment is just as first-class as running it on a laptop with UI.

In practice, almost every serious change in AICO starts as:

Extend the shared library and backend/modelservice logic.

Expose that capability via a CLI command.

Use the CLI to exercise it, log it, and harden it.

Only then add UI affordances in Flutter or Studio.

The result is a system that feels like a tool you can *operate*, not just an app you can *click*.
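The "expose it via a CLI command" step can be sketched as command groups with subcommands. AICO's CLI is built with Typer; this sketch uses `argparse` from the standard library to stay dependency-free. The program name `aico-sketch` and the subcommands `status` and `run` are hypothetical, since the article names the `lmdb` and `scheduler` groups but not what they contain.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="aico-sketch")
    groups = parser.add_subparsers(dest="group", required=True)

    # "lmdb" group with one hypothetical subcommand.
    lmdb = groups.add_parser("lmdb").add_subparsers(dest="command", required=True)
    lmdb.add_parser("status")

    # "scheduler" group with one hypothetical subcommand taking a task name.
    sched = groups.add_parser("scheduler").add_subparsers(dest="command", required=True)
    run = sched.add_parser("run")
    run.add_argument("task")
    return parser


def dispatch(argv: list[str]) -> str:
    """Parse arguments and return the text a real command would print."""
    args = build_parser().parse_args(argv)
    if (args.group, args.command) == ("lmdb", "status"):
        return "lmdb: ok"
    if (args.group, args.command) == ("scheduler", "run"):
        return f"scheduler: ran {args.task}"
    raise SystemExit(f"unknown command: {args.group} {args.command}")


print(dispatch(["lmdb", "status"]))                            # lmdb: ok
print(dispatch(["scheduler", "run", "memory_consolidation"]))  # scheduler: ran memory_consolidation
```

Because `dispatch` takes a plain argument list and returns text, the same commands can be exercised from a script or a test before any UI exists, which is the workflow the steps above describe.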

Architecture in service of relationship

All of these decisions – hierarchy, bus, modularity, roaming, CLI‑first – serve one goal: a companion that can grow and stay with you.

Stability over years – The system can evolve (new modules, new models, new UIs) without throwing away existing memory or emotional history.

Embodiment without lock-in – AICO can move from screen to headset to spatial device without changing who it is.

User control – Local-first and self-hosted deployments keep data under the user’s or family’s control.

Serious infrastructure behind a soft surface – The UI is meant to feel gentle and human, while the internals behave like infrastructure you can debug, script and trust.

If AICO is going to be more than a temporary app, the architecture has to be designed for that from the start. That is why it looks more like a small platform than a single program – so that the relationship can outlive any one device or interface.